Traditional AI systems were built to perform specific, narrowly defined tasks, such as classical computer vision or speech recognition. These models excelled in a single domain but lacked the flexibility to adapt to new problems without extensive retraining. Then came the era of generative AI. Large language models like GPT-3 exhibited surprising “emergent” capabilities: they could translate, summarize, or write code without those skills being explicitly programmed.

It seemed that with enough scale and diverse data, intelligence would simply emerge. Much of the progress made by AI labs rests on Rich Sutton’s “bitter lesson”:

“The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin.”

This has proven true: scaling both training-time and test-time compute has led to predictable improvements in AI capabilities across the board.

Yet, despite their remarkable adaptability, today’s LLMs have notable shortcomings. They often struggle with tasks requiring many sequential steps, such as sophisticated planning or decision making. Each step can introduce small errors that compound over time, limiting overall accuracy and reliability.
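To make the compounding concrete, here is a small sketch under a simplifying assumption of ours (it is not a claim from any benchmark): every step succeeds independently with the same probability, so a task with `num_steps` steps succeeds end to end with probability `step_accuracy ** num_steps`. The function name is hypothetical.

```python
def chain_success_probability(step_accuracy: float, num_steps: int) -> float:
    """End-to-end success probability for a chain of steps.

    Assumes (for illustration only) that steps fail independently and
    that every step has the same accuracy.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_accuracy ** num_steps


if __name__ == "__main__":
    # Show how a fixed per-step accuracy erodes as the chain gets longer.
    for steps in (1, 5, 20, 50):
        probability = chain_success_probability(0.95, steps)
        print(f"{steps:>2} steps at 95% per step: {probability:.1%} end to end")
```

Under this assumption, a module that is right 95% of the time per step completes a 20-step task only about 36% of the time, which is why long plans are much harder than single-shot answers.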

This exposes the gap between AI capabilities and the integration of those capabilities into AI products. While the scaling argument holds for capabilities, a well-crafted AI product has to be user-first: data specific to the vertical domain, an understanding of user workflows, and familiarity with domain terminology are key to easier user adoption.

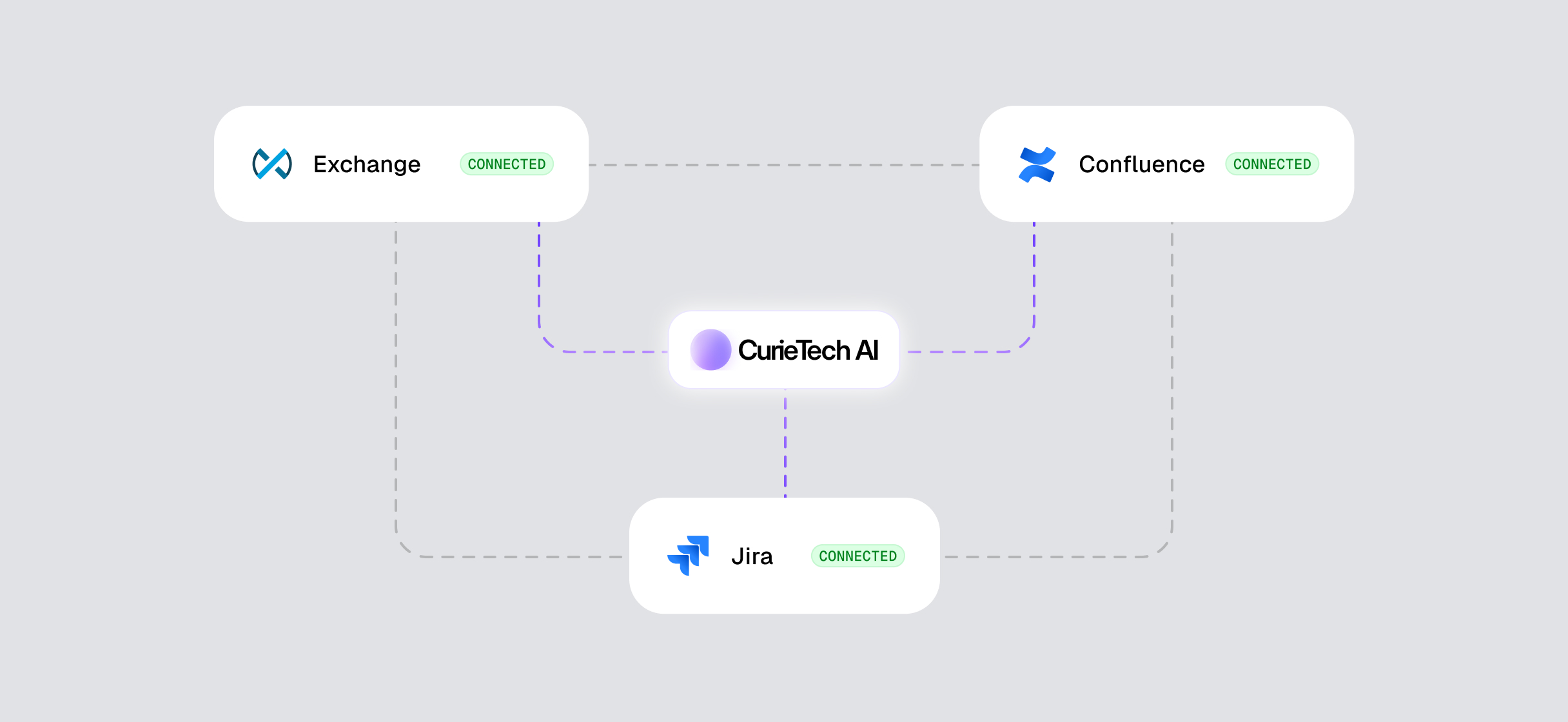

At CurieTech AI, we believe that the best path forward is to assemble AI capabilities like building blocks, combining large models with domain-specific modules and retrieval systems. This approach increases reliability and opens the door to continuous improvement. Note that an increase in AI capabilities is not at odds with the product; it would in fact improve it!

However, this raises the question of how to design, maintain, and improve such a compound system. We think about this a lot, and we believe the answer lies in how complex modular systems (cars, computers, and even software) were designed in the past. We need components that have concrete specifications and are verifiable, debuggable, and improvable.

Specifications

Natural language, while general and flexible, can lead to ambiguous specifications. To build a robust module, knowing its inputs and outputs is crucial for evaluating and subsequently improving it. Breaking the main task into sub-problems and defining the input-output requirements for each one requires a lot of domain knowledge and UX innovation to get right. Current LLMs are trained to be answer engines that fill in missing details with default assumptions to generate a solution. Instead, we want an AI system that clarifies the user's intent while staying grounded in the domain use case.

Here's a concise example illustrating the difference between a traditional LLM-based answer engine and a desired intent-clarifying AI system that's grounded in domain-specific context:

Scenario:

A user requests:

"Can you summarize the recent customer reviews for our mobile app?"

Traditional LLM Answer (assumes defaults even with the ability to access all internal reviews of the mobile app):

"The recent reviews for your app are generally positive, with users praising ease of use and design. However, some customers mention occasional crashes and slow loading times."

Intent-Clarifying, Domain-Grounded AI Interaction:

- AI: "Could you clarify which reviews to summarize? Should I focus on specific time frames, platforms (iOS or Android), or specific user segments?"

- User: "Summarize just the negative reviews from Android users over the past month."

- AI (clarifies further): "Would you prefer a brief sentiment summary, or a structured breakdown including specific recurring issues, device models, and app versions?"

- User: "Structured breakdown with recurring issues and device models."

Clearly Defined Input-Output after Clarification:

In the second case, the AI explicitly clarifies ambiguities in the initial natural-language query, resulting in clearly defined inputs and outputs grounded in the domain-specific context of mobile app analytics.
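One way to picture the outcome of that dialogue is as a typed request whose unset fields tell the system what to ask about. The sketch below is illustrative only: the class and field names are hypothetical, and reading "the past month" as 30 days is our assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Platform(Enum):
    IOS = "ios"
    ANDROID = "android"


class Sentiment(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"


class OutputFormat(Enum):
    BRIEF_SUMMARY = "brief_summary"
    STRUCTURED_BREAKDOWN = "structured_breakdown"


@dataclass
class ReviewSummaryRequest:
    """Hypothetical specification for a review-summary task."""
    platform: Optional[Platform] = None
    sentiment: Optional[Sentiment] = None
    lookback_days: Optional[int] = None
    output_format: Optional[OutputFormat] = None
    breakdown_fields: Tuple[str, ...] = ()

    def missing_fields(self) -> List[str]:
        """Fields the system should ask about instead of assuming a default."""
        required = ("platform", "sentiment", "lookback_days", "output_format")
        return [name for name in required if getattr(self, name) is None]


# The initial query pins down nothing, so every field needs clarification.
initial = ReviewSummaryRequest()

# After the two clarifying turns, the request is fully specified.
clarified = ReviewSummaryRequest(
    platform=Platform.ANDROID,
    sentiment=Sentiment.NEGATIVE,
    lookback_days=30,  # assumption: "past month" read as 30 days
    output_format=OutputFormat.STRUCTURED_BREAKDOWN,
    breakdown_fields=("recurring_issues", "device_models"),
)

if __name__ == "__main__":
    print("Still to clarify:", initial.missing_fields())
    print("Still to clarify:", clarified.missing_fields())
```

The design choice here is that the module only runs once `missing_fields()` is empty, so the answer is evaluated against an explicit specification rather than against guessed defaults.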

Verifiability

Ensuring verifiability in complex AI systems requires rigorous testing at both the individual module level and the overall system level. Achieving this becomes significantly more manageable by clearly defining concrete specifications and implementing comprehensive custom evaluations that extend beyond standard benchmarks. Additionally, creating robust debugging tools and methods to investigate module behavior becomes essential, especially when verification is challenging.

A fundamental practice is designing systems in a way that clearly attributes the root cause of failures to specific modules. This approach allows for meaningful insights, facilitating targeted improvements.

Consider an AI system designed for automated customer support. Each component within this system can have explicit input-output definitions and clear metrics for evaluation, as illustrated below:

By systematically benchmarking and evaluating each module, developers can effectively diagnose issues and attribute performance shortcomings to precise components. Clearly defined input-output expectations for each module enhance transparency, simplify debugging, and ultimately improve system reliability and user satisfaction.
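As a minimal sketch of per-module benchmarking, the code below scores each module against its own input-output cases and reports which ones fall below a target. Everything named here is an assumption made for illustration: the two modules (`intent_classifier`, `ticket_router`), their stub implementations, the test cases, and the 0.9 threshold are not taken from a real system.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class ModuleSpec:
    """A module plus the input-output cases and target it is judged against."""
    name: str
    run: Callable[[str], str]
    cases: List[Tuple[str, str]]  # (input, expected output)
    min_accuracy: float


def evaluate(spec: ModuleSpec) -> float:
    """Fraction of cases where the module output matches the expectation exactly."""
    correct = sum(1 for given, expected in spec.cases if spec.run(given) == expected)
    return correct / len(spec.cases)


def failing_modules(specs: List[ModuleSpec]) -> Dict[str, float]:
    """Map each module that misses its target to the accuracy it achieved."""
    failures = {}
    for spec in specs:
        accuracy = evaluate(spec)
        if accuracy < spec.min_accuracy:
            failures[spec.name] = accuracy
    return failures


# Hypothetical stand-ins for real modules in a customer-support pipeline.
def classify_intent(message: str) -> str:
    return "refund" if "refund" in message.lower() else "other"


def route_ticket(intent: str) -> str:
    # Deliberately buggy stub: ignores the intent and always picks billing.
    return "billing_team"


SPECS = [
    ModuleSpec(
        name="intent_classifier",
        run=classify_intent,
        cases=[("I want a refund", "refund"), ("App crashes on login", "other")],
        min_accuracy=0.9,
    ),
    ModuleSpec(
        name="ticket_router",
        run=route_ticket,
        cases=[("refund", "billing_team"), ("other", "support_team")],
        min_accuracy=0.9,
    ),
]

if __name__ == "__main__":
    for name, accuracy in failing_modules(SPECS).items():
        print(f"{name} is below target: accuracy {accuracy:.0%}")
```

Because each module is scored in isolation, a drop in end-to-end quality can be traced to the router here rather than to the classifier feeding it.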

Improvement

Once the problematic module is identified through clear specifications and targeted verification, improvements can be systematically approached using methods like prompting, fine-tuning, or modular decomposition. Choosing among these methods depends on factors such as the complexity of errors, the quality of available training data, and the desired speed of iteration.

For example, if the sentiment analysis module often misclassifies neutral user reviews as negative, the following iterative approach can be adopted:

- Prompt Adjustment: Experiment with explicit prompting ("Classify reviews carefully; only flag clearly negative reviews mentioning specific issues or strong negative emotions.") and evaluate improvements on test benchmarks.

- Fine-tuning: If prompting doesn't yield sufficient accuracy, fine-tune the sentiment analysis model on a curated dataset of neutrally toned reviews so that it recognizes the subtle language nuances specific to app feedback.

- Modular Decomposition: Further, break sentiment classification into simpler sub-tasks such as emotion intensity detection, issue-based keyword spotting, and contextual user frustration analysis. Evaluate each independently and integrate successful subcomponents back into the main module.
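The first step, comparing a baseline against an adjusted prompt on a test benchmark, could be measured along the lines of the sketch below. The two classifier functions are keyword-based stand-ins for the sentiment module under each prompt (a real system would call the model instead), and the four-review benchmark is made up for illustration.

```python
from typing import Callable, List, Tuple

Classifier = Callable[[str], str]
LabeledReview = Tuple[str, str]  # (review text, gold label)


def neutral_as_negative_rate(classify: Classifier, benchmark: List[LabeledReview]) -> float:
    """Share of gold-neutral reviews that the classifier labels negative."""
    neutral_texts = [text for text, label in benchmark if label == "neutral"]
    if not neutral_texts:
        raise ValueError("benchmark contains no neutral reviews")
    wrong = sum(1 for text in neutral_texts if classify(text) == "negative")
    return wrong / len(neutral_texts)


def accuracy(classify: Classifier, benchmark: List[LabeledReview]) -> float:
    """Overall accuracy, to check that a fix does not hurt true negatives."""
    correct = sum(1 for text, label in benchmark if classify(text) == label)
    return correct / len(benchmark)


# Stand-in for the module under the baseline prompt: any problem word counts.
def baseline_classifier(text: str) -> str:
    problem_words = ("crash", "slow", "bug")
    return "negative" if any(w in text.lower() for w in problem_words) else "neutral"


# Stand-in for the module under the adjusted prompt: needs strong negative wording.
def adjusted_classifier(text: str) -> str:
    strong_words = ("hate", "terrible", "unusable")
    return "negative" if any(w in text.lower() for w in strong_words) else "neutral"


# Made-up benchmark; a real one would be drawn from labeled app reviews.
BENCHMARK: List[LabeledReview] = [
    ("The app is a bit slow to open but works fine.", "neutral"),
    ("Fixed the login bug in the last update, thanks.", "neutral"),
    ("It does what it says.", "neutral"),
    ("Terrible, it crashes every time I upload a photo.", "negative"),
]

if __name__ == "__main__":
    for name, clf in (("baseline", baseline_classifier), ("adjusted", adjusted_classifier)):
        rate = neutral_as_negative_rate(clf, BENCHMARK)
        print(f"{name}: neutral-as-negative {rate:.0%}, accuracy {accuracy(clf, BENCHMARK):.0%}")
```

Tracking the targeted error rate alongside overall accuracy gives a simple rule for deciding whether prompting is enough or whether fine-tuning or decomposition is worth the extra cost.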

This systematic, iterative experimentation, grounded in domain insights and clear specifications, is key to continuously improving the reliability and user satisfaction of AI-driven products.

Takeaways

We believe that although many foundational AI breakthroughs were initially emergent, real progress towards robust, trustworthy AI will come from intentional, constructive design. We believe the last mile is not an annoyance but the core problem, and that vertical-specific systems, rather than models, will help us create a delightful AI product for our users.
